import torch

import numpy as np

import matplotlib.pyplot as plt

from synthetic import simulate_lorenz_96

from models.clstm import cLSTM, train_model_ista

1. cMLP, cRNN, cLSTM

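- cMLP

  - Based on a fully connected neural network (MLP)

  - Takes past observations as input and produces the output through a nonlinear function

  - Treats the lagged values as independent input variables, without modeling their temporal order

  - Pros: simple, with a low computational cost

  - Cons: ignores the sequential structure of the series (no order information) \(\to\) long-term dependencies are hard to learn

  - \(\hat{X}_{t+1}=f_{\theta}(X_t,X_{t-1},\dots,X_{t-k})\)

  - \(f_{\theta}\): the MLP, \(k\): number of past time steps used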

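- cRNN

  - Based on a recurrent neural network (RNN), so it can capture temporal dependence

  - Learns by storing information about past data in a hidden state

  - Pros: represents sequential structure well

  - Cons: long-term dependencies remain hard to learn (vanishing gradient problem)

  - \(h_t=\sigma(W_h h_{t-1}+W_x X_t)\)

  - \(\hat{X}_{t+1}=f_{\theta}(h_t)\)

  - \(h_t\): hidden state, \(W_h, W_x\): weight matrices, \(\sigma\): activation function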

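- cLSTM

  - Based on the long short-term memory (LSTM) network

  - Addresses the vanishing gradient problem of RNNs, which makes long-term dependencies learnable

  - Uses a cell state and gates to keep important information and discard what is not needed

  - Cons: the extra computation can make training slow

  - \(f_t = \sigma(W_f[h_{t-1},X_t]+b_f)\)

  - \(i_t = \sigma(W_i[h_{t-1},X_t]+b_i)\)

  - \(o_t = \sigma(W_o[h_{t-1},X_t]+b_o)\)

  - \(\tilde{C}_t = \tanh(W_C[h_{t-1},X_t]+b_C)\)

  - \(C_t=f_t \odot C_{t-1}+i_t \odot \tilde{C}_t\)

  - \(h_t=o_t\odot \tanh(C_t)\)

  - \(f_t\): forget gate, \(i_t\): input gate, \(o_t\): output gate, \(\tilde{C}_t\): candidate cell state, \(C_t\): cell state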
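To make the gate equations concrete, here is a minimal scalar sketch of one LSTM step. It is not the `cLSTM` implementation from the repository: the function name `lstm_step`, the scalar weights, and the toy inputs are illustrative assumptions, and the candidate state is taken to be the standard \(\tanh\) form.

```python
import math


def sigmoid(z):
    """Logistic function used by the three gates."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One scalar LSTM step.

    params maps each name in {"f", "i", "o", "c"} to (w_h, w_x, b).
    These scalar weights are hypothetical placeholders for illustration.
    """
    def pre(name):
        w_h, w_x, b = params[name]
        return w_h * h_prev + w_x * x_t + b

    f_t = sigmoid(pre("f"))            # forget gate
    i_t = sigmoid(pre("i"))            # input gate
    o_t = sigmoid(pre("o"))            # output gate
    c_tilde = math.tanh(pre("c"))      # candidate cell state (standard form)
    c_t = f_t * c_prev + i_t * c_tilde
    h_t = o_t * math.tanh(c_t)
    return h_t, c_t


# Run three steps on a toy input sequence with identical toy weights per gate.
params = {name: (0.5, 1.0, 0.0) for name in ("f", "i", "o", "c")}
h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:+.2f}  h={h:+.4f}  c={c:+.4f}")
```

Because \(o_t \in (0,1)\) and \(\tanh\) is bounded, the hidden state always stays inside \((-1, 1)\), while the cell state can accumulate over time through the forget gate.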

Neural Granger Causality Github

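import pandas as pd

# For GPU acceleration

device = torch.device('cuda')

- simulate_lorenz_96 simulates the Lorenz-96 model to generate data

- A nonlinear dynamical system model used in meteorology and dynamical systems research

- Defined by the following differential equation (indices are cyclic over the p variables)

- \(\frac{dx_i}{dt}=(x_{i+1}-x_{i-2})x_{i-1}-x_i+F\)

- A nonlinear system that mimics complex temporal behavior

- Used in fields such as weather modeling

- Can exhibit chaotic behavior

- p: dimension of the system (number of variables), T: length of the simulation in time steps, F: external forcing (the parameter that drives chaos)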
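The differential equation above can be integrated directly. The sketch below is a self-contained illustration, not the repository's `simulate_lorenz_96`: the helper names `lorenz96_rhs`, `rk4_step`, and `simulate`, the step size `dt`, and the fourth-order Runge-Kutta scheme are all assumptions chosen for clarity.

```python
def lorenz96_rhs(x, F):
    """dx_i/dt = (x_{i+1} - x_{i-2}) * x_{i-1} - x_i + F, with cyclic indices."""
    p = len(x)
    return [
        (x[(i + 1) % p] - x[(i - 2) % p]) * x[(i - 1) % p] - x[i] + F
        for i in range(p)
    ]


def rk4_step(x, F, dt):
    """Advance the state by one classical Runge-Kutta (RK4) step."""
    def shifted(base, slope, scale):
        return [b + scale * s for b, s in zip(base, slope)]

    k1 = lorenz96_rhs(x, F)
    k2 = lorenz96_rhs(shifted(x, k1, dt / 2), F)
    k3 = lorenz96_rhs(shifted(x, k2, dt / 2), F)
    k4 = lorenz96_rhs(shifted(x, k3, dt), F)
    return [
        xi + dt / 6 * (a + 2 * b + 2 * c + d)
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    ]


def simulate(p=10, F=10.0, T=1000, dt=0.01):
    """Return T states of a p-dimensional Lorenz-96 system (dt is an assumed step)."""
    x = [F] * p
    x[0] += 0.01  # small perturbation away from the fixed point x_i = F
    traj = [list(x)]
    for _ in range(T - 1):
        x = rk4_step(x, F, dt)
        traj.append(list(x))
    return traj


traj = simulate(p=10, F=10.0, T=1000)
print(len(traj), len(traj[0]))  # T rows, p columns
```

The uniform state \(x_i = F\) is a fixed point of the equation, which is why the sketch perturbs one coordinate before integrating.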

# Simulate data

#X_np, GC = simulate_lorenz_96(p=10, F=10, T=1000)

#X = torch.tensor(X_np[np.newaxis], dtype=torch.float32, device=device)

wt = pd.read_csv("weather2024.csv")

wt

| | 일시 | 기온 | 강수량 | 풍속 | 습도 | 일사 |
|---|---|---|---|---|---|---|
| 0 | 2024-01-01 01:00 | 3.8 | 0.0 | 1.5 | 80 | 0.0 |
| 1 | 2024-01-01 02:00 | 3.9 | 0.0 | 0.2 | 79 | 0.0 |
| 2 | 2024-01-01 03:00 | 3.5 | 0.0 | 0.4 | 84 | 0.0 |
| 3 | 2024-01-01 04:00 | 1.9 | 0.0 | 1.1 | 92 | 0.0 |
| 4 | 2024-01-01 05:00 | 1.4 | 0.0 | 1.5 | 94 | 0.0 |
| ... | ... | ... | ... | ... | ... | ... |
| 8755 | 2024-12-30 20:00 | 7.6 | 0.0 | 1.4 | 71 | 0.0 |
| 8756 | 2024-12-30 21:00 | 7.5 | 0.0 | 1.7 | 69 | 0.0 |
| 8757 | 2024-12-30 22:00 | 7.2 | 0.0 | 1.2 | 70 | 0.0 |
| 8758 | 2024-12-30 23:00 | 7.2 | 0.0 | 1.7 | 71 | 0.0 |
| 8759 | 2024-12-31 00:00 | 7.4 | 0.0 | 2.8 | 70 | 0.0 |

8760 rows × 6 columns

Column names: 일시 (timestamp), 기온 (temperature), 강수량 (precipitation), 풍속 (wind speed), 습도 (humidity), 일사 (solar radiation)

- Pipeline: standardize \(\to\) NumPy float32 array \(\to\) torch.Tensor with an added leading (batch) dimension
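As a rough illustration of what the standardization step does, the sketch below computes column-wise z-scores by hand and then wraps the result in one extra leading dimension. It uses plain Python lists rather than `StandardScaler` or NumPy, the helper name `standardize_columns` is hypothetical, and the sample rows are the first four temperature and humidity values from the table.

```python
import math


def standardize_columns(rows):
    """Column-wise z-score with the population standard deviation (ddof=0)."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    stds = [
        math.sqrt(sum((v - m) ** 2 for v in c) / n)
        for c, m in zip(cols, means)
    ]
    # Constant columns would divide by zero; leave them unscaled instead.
    stds = [s if s > 0 else 1.0 for s in stds]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


# First four (temperature, humidity) pairs from the table.
rows = [[3.8, 80.0], [3.9, 79.0], [3.5, 84.0], [1.9, 92.0]]
scaled = standardize_columns(rows)

# Mimics X_np[np.newaxis]: one batch of shape (T, p) becomes (1, T, p).
batched = [scaled]
print(len(batched), len(batched[0]), len(batched[0][0]))
```

After scaling, every column has mean 0 and unit variance, so variables measured on very different scales (for example humidity in percent and wind speed) contribute comparably to the loss.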

from sklearn.preprocessing import StandardScaler

X_np = wt.iloc[:, 1:].values

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X_np)

X_np = X_scaled.astype(np.float32)

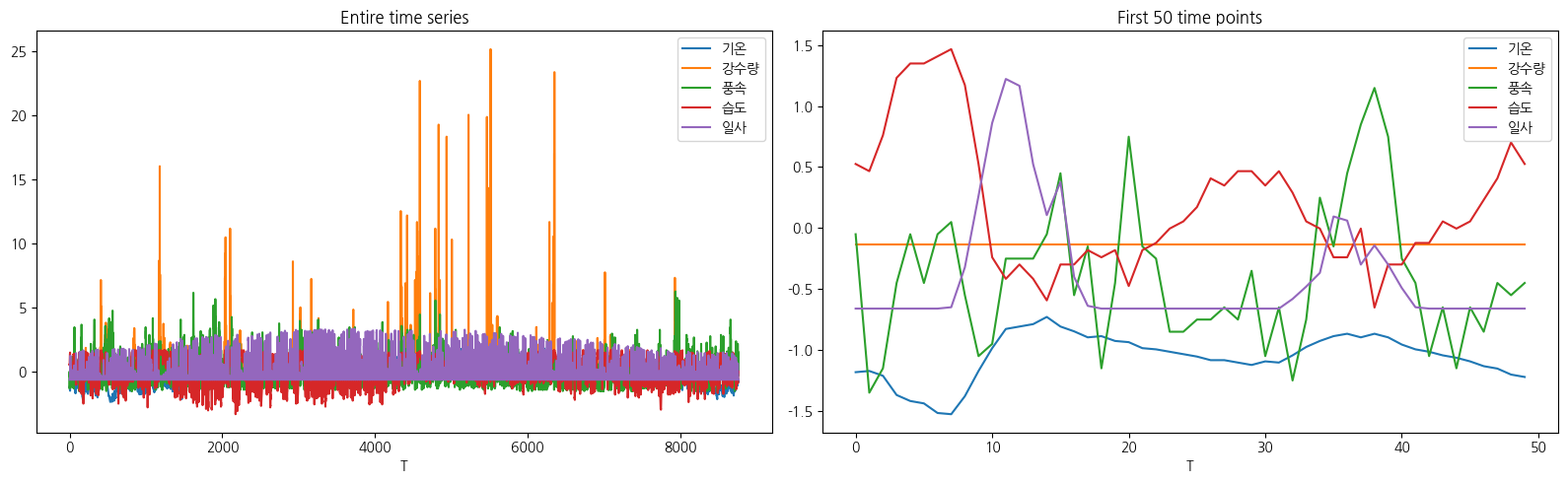

X = torch.tensor(X_np[np.newaxis], dtype=torch.float32, device=device)

wt.columns

Index(['일시', '기온', '강수량', '풍속', '습도', '일사'], dtype='object')

fig, axarr = plt.subplots(1, 2, figsize=(16, 5))

# Entire time series (temperature, precipitation, wind speed, humidity, solar radiation)

axarr[0].plot(X_np)

axarr[0].set_xlabel('T')

axarr[0].set_title('Entire time series')

axarr[0].legend(wt.columns[1:]) # add a legend

# First 50 time steps

axarr[1].plot(X_np[:50])

axarr[1].set_xlabel('T')

axarr[1].set_title('First 50 time points')

axarr[1].legend(wt.columns[1:]) # add a legend

# Tidy the layout and display

plt.tight_layout()

plt.show()

- X.shape[-1]: number of columns (input series), 5 here

- hidden: size of the LSTM hidden state

# Set up model

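clstm = cLSTM(X.shape[-1], hidden=100).cuda(device=device)

- context=n: the model is trained on windows of n past time steps

- lam, lam_ridge: regularization hyperparameters (lam weights the sparsity penalty, lam_ridge the ridge penalty); fine to leave unchanged

- lr: learning rate

- max_iter: maximum number of training iterations

- check_every: interval, in iterations, at which the loss is evaluated and printed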
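To see how `lam` and `lr` interact in ISTA-style training, the sketch below applies the generic group soft-thresholding (proximal) operator to two toy weight groups. That `train_model_ista` thresholds each input series' weight group at `lr * lam` is an assumption here, not something confirmed by this notebook. The helper name `group_soft_threshold` and the toy weights are hypothetical, while `lr` and `lam` are the values from the `train_model_ista` call in this notebook.

```python
import math


def group_soft_threshold(group, threshold):
    """Shrink a weight group toward zero; zero it out if its norm <= threshold."""
    norm = math.sqrt(sum(w * w for w in group))
    if norm <= threshold:
        return [0.0] * len(group)
    scale = 1.0 - threshold / norm
    return [w * scale for w in group]


lr, lam = 1e-3, 10.0      # values passed to train_model_ista in this notebook
threshold = lr * lam      # assumed step-size-scaled penalty, 0.01 here

weak = [0.003, -0.004]    # group norm 0.005, below the threshold
strong = [0.3, -0.4]      # group norm 0.5, well above the threshold

print(group_soft_threshold(weak, threshold))    # group removed entirely
print(group_soft_threshold(strong, threshold))  # group shrunk, direction kept
```

Under this reading, a group is dropped whenever the gradient step cannot keep its norm above `lr * lam`, so a large `lam` can push every input group to exactly zero. Whether that is what happens in a given run has to be checked against the training log.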

# Train with ISTA

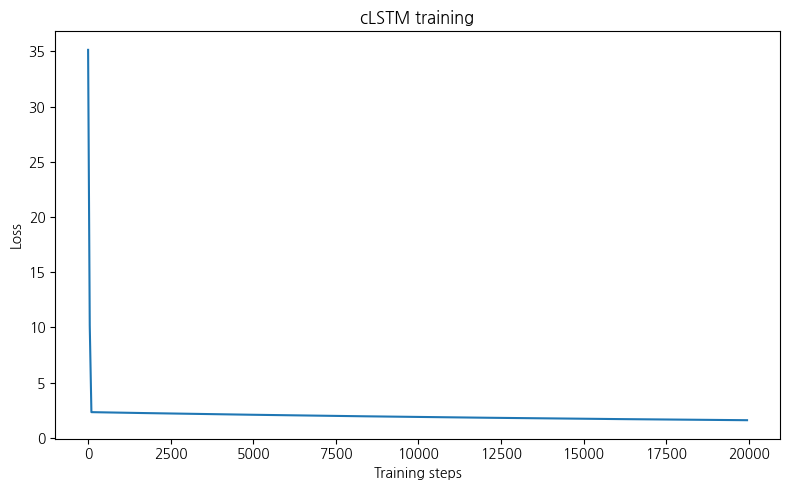

train_loss_list = train_model_ista(

    clstm, X, context=10, lam=10.0, lam_ridge=1e-2, lr=1e-3, max_iter=20000,

    check_every=50)

----------Iter = 50----------

Loss = 35.149139

Variable usage = 100.00%

----------Iter = 100----------

Loss = 10.155972

Variable usage = 100.00%

----------Iter = 150----------

Loss = 2.331874

Variable usage = 0.00%

----------Iter = 200----------

Loss = 2.328956

Variable usage = 0.00%

----------Iter = 250----------

Loss = 2.326103

Variable usage = 0.00%

----------Iter = 300----------

Loss = 2.323302

Variable usage = 0.00%

----------Iter = 350----------

Loss = 2.320541

Variable usage = 0.00%

----------Iter = 400----------

Loss = 2.317814

Variable usage = 0.00%

----------Iter = 450----------

Loss = 2.315112

Variable usage = 0.00%

----------Iter = 500----------

Loss = 2.312431

Variable usage = 0.00%

----------Iter = 550----------

Loss = 2.309769

Variable usage = 0.00%

----------Iter = 600----------

Loss = 2.307121

Variable usage = 0.00%

----------Iter = 650----------

Loss = 2.304486

Variable usage = 0.00%

----------Iter = 700----------

Loss = 2.301862

Variable usage = 0.00%

----------Iter = 750----------

Loss = 2.299247

Variable usage = 0.00%

----------Iter = 800----------

Loss = 2.296641

Variable usage = 0.00%

----------Iter = 850----------

Loss = 2.294042

Variable usage = 0.00%

----------Iter = 900----------

Loss = 2.291451

Variable usage = 0.00%

----------Iter = 950----------

Loss = 2.288867

Variable usage = 0.00%

----------Iter = 1000----------

Loss = 2.286288

Variable usage = 0.00%

----------Iter = 1050----------

Loss = 2.283716

Variable usage = 0.00%

----------Iter = 1100----------

Loss = 2.281150

Variable usage = 0.00%

----------Iter = 1150----------

Loss = 2.278589

Variable usage = 0.00%

----------Iter = 1200----------

Loss = 2.276034

Variable usage = 0.00%

----------Iter = 1250----------

Loss = 2.273485

Variable usage = 0.00%

----------Iter = 1300----------

Loss = 2.270941

Variable usage = 0.00%

----------Iter = 1350----------

Loss = 2.268401

Variable usage = 0.00%

----------Iter = 1400----------

Loss = 2.265868

Variable usage = 0.00%

----------Iter = 1450----------

Loss = 2.263339

Variable usage = 0.00%

----------Iter = 1500----------

Loss = 2.260815

Variable usage = 0.00%

----------Iter = 1550----------

Loss = 2.258297

Variable usage = 0.00%

----------Iter = 1600----------

Loss = 2.255784

Variable usage = 0.00%

----------Iter = 1650----------

Loss = 2.253276

Variable usage = 0.00%

----------Iter = 1700----------

Loss = 2.250772

Variable usage = 0.00%

----------Iter = 1750----------

Loss = 2.248274

Variable usage = 0.00%

----------Iter = 1800----------

Loss = 2.245781

Variable usage = 0.00%

----------Iter = 1850----------

Loss = 2.243293

Variable usage = 0.00%

----------Iter = 1900----------

Loss = 2.240810

Variable usage = 0.00%

----------Iter = 1950----------

Loss = 2.238332

Variable usage = 0.00%

----------Iter = 2000----------

Loss = 2.235858

Variable usage = 0.00%

----------Iter = 2050----------

Loss = 2.233390

Variable usage = 0.00%

----------Iter = 2100----------

Loss = 2.230927

Variable usage = 0.00%

----------Iter = 2150----------

Loss = 2.228468

Variable usage = 0.00%

----------Iter = 2200----------

Loss = 2.226015

Variable usage = 0.00%

----------Iter = 2250----------

Loss = 2.223566

Variable usage = 0.00%

----------Iter = 2300----------

Loss = 2.221122

Variable usage = 0.00%

----------Iter = 2350----------

Loss = 2.218683

Variable usage = 0.00%

----------Iter = 2400----------

Loss = 2.216249

Variable usage = 0.00%

----------Iter = 2450----------

Loss = 2.213820

Variable usage = 0.00%

----------Iter = 2500----------

Loss = 2.211396

Variable usage = 0.00%

----------Iter = 2550----------

Loss = 2.208977

Variable usage = 0.00%

----------Iter = 2600----------

Loss = 2.206562

Variable usage = 0.00%

----------Iter = 2650----------

Loss = 2.204152

Variable usage = 0.00%

----------Iter = 2700----------

Loss = 2.201747

Variable usage = 0.00%

----------Iter = 2750----------

Loss = 2.199347

Variable usage = 0.00%

----------Iter = 2800----------

Loss = 2.196952

Variable usage = 0.00%

----------Iter = 2850----------

Loss = 2.194561

Variable usage = 0.00%

----------Iter = 2900----------

Loss = 2.192176

Variable usage = 0.00%

----------Iter = 2950----------

Loss = 2.189795

Variable usage = 0.00%

----------Iter = 3000----------

Loss = 2.187418

Variable usage = 0.00%

----------Iter = 3050----------

Loss = 2.185047

Variable usage = 0.00%

----------Iter = 3100----------

Loss = 2.182680

Variable usage = 0.00%

----------Iter = 3150----------

Loss = 2.180318

Variable usage = 0.00%

----------Iter = 3200----------

Loss = 2.177961

Variable usage = 0.00%

----------Iter = 3250----------

Loss = 2.175608

Variable usage = 0.00%

----------Iter = 3300----------

Loss = 2.173260

Variable usage = 0.00%

----------Iter = 3350----------

Loss = 2.170917

Variable usage = 0.00%

----------Iter = 3400----------

Loss = 2.168579

Variable usage = 0.00%

----------Iter = 3450----------

Loss = 2.166245

Variable usage = 0.00%

----------Iter = 3500----------

Loss = 2.163915

Variable usage = 0.00%

----------Iter = 3550----------

Loss = 2.161591

Variable usage = 0.00%

----------Iter = 3600----------

Loss = 2.159271

Variable usage = 0.00%

----------Iter = 3650----------

Loss = 2.156956

Variable usage = 0.00%

----------Iter = 3700----------

Loss = 2.154645

Variable usage = 0.00%

----------Iter = 3750----------

Loss = 2.152339

Variable usage = 0.00%

----------Iter = 3800----------

Loss = 2.150038

Variable usage = 0.00%

----------Iter = 3850----------

Loss = 2.147741

Variable usage = 0.00%

----------Iter = 3900----------

Loss = 2.145449

Variable usage = 0.00%

----------Iter = 3950----------

Loss = 2.143161

Variable usage = 0.00%

----------Iter = 4000----------

Loss = 2.140878

Variable usage = 0.00%

----------Iter = 4050----------

Loss = 2.138600

Variable usage = 0.00%

----------Iter = 4100----------

Loss = 2.136326

Variable usage = 0.00%

----------Iter = 4150----------

Loss = 2.134056

Variable usage = 0.00%

----------Iter = 4200----------

Loss = 2.131791

Variable usage = 0.00%

----------Iter = 4250----------

Loss = 2.129531

Variable usage = 0.00%

----------Iter = 4300----------

Loss = 2.127275

Variable usage = 0.00%

----------Iter = 4350----------

Loss = 2.125024

Variable usage = 0.00%

----------Iter = 4400----------

Loss = 2.122777

Variable usage = 0.00%

----------Iter = 4450----------

Loss = 2.120535

Variable usage = 0.00%

----------Iter = 4500----------

Loss = 2.118297

Variable usage = 0.00%

----------Iter = 4550----------

Loss = 2.116063

Variable usage = 0.00%

----------Iter = 4600----------

Loss = 2.113835

Variable usage = 0.00%

----------Iter = 4650----------

Loss = 2.111610

Variable usage = 0.00%

----------Iter = 4700----------

Loss = 2.109390

Variable usage = 0.00%

----------Iter = 4750----------

Loss = 2.107174

Variable usage = 0.00%

----------Iter = 4800----------

Loss = 2.104963

Variable usage = 0.00%

----------Iter = 4850----------

Loss = 2.102756

Variable usage = 0.00%

----------Iter = 4900----------

Loss = 2.100554

Variable usage = 0.00%

----------Iter = 4950----------

Loss = 2.098356

Variable usage = 0.00%

----------Iter = 5000----------

Loss = 2.096163

Variable usage = 0.00%

----------Iter = 5050----------

Loss = 2.093973

Variable usage = 0.00%

----------Iter = 5100----------

Loss = 2.091789

Variable usage = 0.00%

----------Iter = 5150----------

Loss = 2.089608

Variable usage = 0.00%

----------Iter = 5200----------

Loss = 2.087432

Variable usage = 0.00%

----------Iter = 5250----------

Loss = 2.085260

Variable usage = 0.00%

----------Iter = 5300----------

Loss = 2.083093

Variable usage = 0.00%

----------Iter = 5350----------

Loss = 2.080930

Variable usage = 0.00%

----------Iter = 5400----------

Loss = 2.078771

Variable usage = 0.00%

----------Iter = 5450----------

Loss = 2.076617

Variable usage = 0.00%

----------Iter = 5500----------

Loss = 2.074467

Variable usage = 0.00%

----------Iter = 5550----------

Loss = 2.072321

Variable usage = 0.00%

----------Iter = 5600----------

Loss = 2.070179

Variable usage = 0.00%

----------Iter = 5650----------

Loss = 2.068043

Variable usage = 0.00%

----------Iter = 5700----------

Loss = 2.065909

Variable usage = 0.00%

----------Iter = 5750----------

Loss = 2.063781

Variable usage = 0.00%

----------Iter = 5800----------

Loss = 2.061656

Variable usage = 0.00%

----------Iter = 5850----------

Loss = 2.059536

Variable usage = 0.00%

----------Iter = 5900----------

Loss = 2.057420

Variable usage = 0.00%

----------Iter = 5950----------

Loss = 2.055308

Variable usage = 0.00%

----------Iter = 6000----------

Loss = 2.053200

Variable usage = 0.00%

----------Iter = 6050----------

Loss = 2.051097

Variable usage = 0.00%

----------Iter = 6100----------

Loss = 2.048998

Variable usage = 0.00%

----------Iter = 6150----------

Loss = 2.046903

Variable usage = 0.00%

----------Iter = 6200----------

Loss = 2.044812

Variable usage = 0.00%

----------Iter = 6250----------

Loss = 2.042726

Variable usage = 0.00%

----------Iter = 6300----------

Loss = 2.040644

Variable usage = 0.00%

----------Iter = 6350----------

Loss = 2.038565

Variable usage = 0.00%

----------Iter = 6400----------

Loss = 2.036491

Variable usage = 0.00%

----------Iter = 6450----------

Loss = 2.034421

Variable usage = 0.00%

----------Iter = 6500----------

Loss = 2.032356

Variable usage = 0.00%

----------Iter = 6550----------

Loss = 2.030294

Variable usage = 0.00%

----------Iter = 6600----------

Loss = 2.028236

Variable usage = 0.00%

----------Iter = 6650----------

Loss = 2.026183

Variable usage = 0.00%

----------Iter = 6700----------

Loss = 2.024134

Variable usage = 0.00%

----------Iter = 6750----------

Loss = 2.022088

Variable usage = 0.00%

----------Iter = 6800----------

Loss = 2.020047

Variable usage = 0.00%

----------Iter = 6850----------

Loss = 2.018010

Variable usage = 0.00%

----------Iter = 6900----------

Loss = 2.015977

Variable usage = 0.00%

----------Iter = 6950----------

Loss = 2.013948

Variable usage = 0.00%

----------Iter = 7000----------

Loss = 2.011923

Variable usage = 0.00%

----------Iter = 7050----------

Loss = 2.009902

Variable usage = 0.00%

----------Iter = 7100----------

Loss = 2.007886

Variable usage = 0.00%

----------Iter = 7150----------

Loss = 2.005873

Variable usage = 0.00%

----------Iter = 7200----------

Loss = 2.003864

Variable usage = 0.00%

----------Iter = 7250----------

Loss = 2.001859

Variable usage = 0.00%

----------Iter = 7300----------

Loss = 1.999858

Variable usage = 0.00%

----------Iter = 7350----------

Loss = 1.997862

Variable usage = 0.00%

----------Iter = 7400----------

Loss = 1.995869

Variable usage = 0.00%

----------Iter = 7450----------

Loss = 1.993881

Variable usage = 0.00%

----------Iter = 7500----------

Loss = 1.991896

Variable usage = 0.00%

----------Iter = 7550----------

Loss = 1.989915

Variable usage = 0.00%

----------Iter = 7600----------

Loss = 1.987938

Variable usage = 0.00%

----------Iter = 7650----------

Loss = 1.985965

Variable usage = 0.00%

----------Iter = 7700----------

Loss = 1.983996

Variable usage = 0.00%

----------Iter = 7750----------

Loss = 1.982031

Variable usage = 0.00%

----------Iter = 7800----------

Loss = 1.980070

Variable usage = 0.00%

----------Iter = 7850----------

Loss = 1.978113

Variable usage = 0.00%

----------Iter = 7900----------

Loss = 1.976159

Variable usage = 0.00%

----------Iter = 7950----------

Loss = 1.974210

Variable usage = 0.00%

----------Iter = 8000----------

Loss = 1.972265

Variable usage = 0.00%

----------Iter = 8050----------

Loss = 1.970323

Variable usage = 0.00%

----------Iter = 8100----------

Loss = 1.968385

Variable usage = 0.00%

----------Iter = 8150----------

Loss = 1.966451

Variable usage = 0.00%

----------Iter = 8200----------

Loss = 1.964521

Variable usage = 0.00%

----------Iter = 8250----------

Loss = 1.962595

Variable usage = 0.00%

----------Iter = 8300----------

Loss = 1.960673

Variable usage = 0.00%

----------Iter = 8350----------

Loss = 1.958754

Variable usage = 0.00%

----------Iter = 8400----------

Loss = 1.956840

Variable usage = 0.00%

----------Iter = 8450----------

Loss = 1.954929

Variable usage = 0.00%

----------Iter = 8500----------

Loss = 1.953022

Variable usage = 0.00%

----------Iter = 8550----------

Loss = 1.951119

Variable usage = 0.00%

----------Iter = 8600----------

Loss = 1.949220

Variable usage = 0.00%

----------Iter = 8650----------

Loss = 1.947324

Variable usage = 0.00%

----------Iter = 8700----------

Loss = 1.945432

Variable usage = 0.00%

----------Iter = 8750----------

Loss = 1.943544

Variable usage = 0.00%

----------Iter = 8800----------

Loss = 1.941660

Variable usage = 0.00%

----------Iter = 8850----------

Loss = 1.939780

Variable usage = 0.00%

----------Iter = 8900----------

Loss = 1.937903

Variable usage = 0.00%

----------Iter = 8950----------

Loss = 1.936030

Variable usage = 0.00%

----------Iter = 9000----------

Loss = 1.934161

Variable usage = 0.00%

----------Iter = 9050----------

Loss = 1.932295

Variable usage = 0.00%

----------Iter = 9100----------

Loss = 1.930434

Variable usage = 0.00%

----------Iter = 9150----------

Loss = 1.928576

Variable usage = 0.00%

----------Iter = 9200----------

Loss = 1.926721

Variable usage = 0.00%

----------Iter = 9250----------

Loss = 1.924871

Variable usage = 0.00%

----------Iter = 9300----------

Loss = 1.923024

Variable usage = 0.00%

----------Iter = 9350----------

Loss = 1.921180

Variable usage = 0.00%

----------Iter = 9400----------

Loss = 1.919341

Variable usage = 0.00%

----------Iter = 9450----------

Loss = 1.917505

Variable usage = 0.00%

----------Iter = 9500----------

Loss = 1.915673

Variable usage = 0.00%

----------Iter = 9550----------

Loss = 1.913844

Variable usage = 0.00%

----------Iter = 9600----------

Loss = 1.912020

Variable usage = 0.00%

----------Iter = 9650----------

Loss = 1.910198

Variable usage = 0.00%

----------Iter = 9700----------

Loss = 1.908381

Variable usage = 0.00%

----------Iter = 9750----------

Loss = 1.906567

Variable usage = 0.00%

----------Iter = 9800----------

Loss = 1.904756

Variable usage = 0.00%

----------Iter = 9850----------

Loss = 1.902950

Variable usage = 0.00%

----------Iter = 9900----------

Loss = 1.901147

Variable usage = 0.00%

----------Iter = 9950----------

Loss = 1.899347

Variable usage = 0.00%

----------Iter = 10000----------

Loss = 1.897551

Variable usage = 0.00%

----------Iter = 10050----------

Loss = 1.895759

Variable usage = 0.00%

----------Iter = 10100----------

Loss = 1.893970

Variable usage = 0.00%

----------Iter = 10150----------

Loss = 1.892185

Variable usage = 0.00%

----------Iter = 10200----------

Loss = 1.890403

Variable usage = 0.00%

----------Iter = 10250----------

Loss = 1.888625

Variable usage = 0.00%

----------Iter = 10300----------

Loss = 1.886851

Variable usage = 0.00%

----------Iter = 10350----------

Loss = 1.885080

Variable usage = 0.00%

----------Iter = 10400----------

Loss = 1.883313

Variable usage = 0.00%

----------Iter = 10450----------

Loss = 1.881549

Variable usage = 0.00%

----------Iter = 10500----------

Loss = 1.879788

Variable usage = 0.00%

----------Iter = 10550----------

Loss = 1.878032

Variable usage = 0.00%

----------Iter = 10600----------

Loss = 1.876278

Variable usage = 0.00%

----------Iter = 10650----------

Loss = 1.874529

Variable usage = 0.00%

----------Iter = 10700----------

Loss = 1.872782

Variable usage = 0.00%

----------Iter = 10750----------

Loss = 1.871039

Variable usage = 0.00%

----------Iter = 10800----------

Loss = 1.869300

Variable usage = 0.00%

----------Iter = 10850----------

Loss = 1.867564

Variable usage = 0.00%

----------Iter = 10900----------

Loss = 1.865832

Variable usage = 0.00%

----------Iter = 10950----------

Loss = 1.864103

Variable usage = 0.00%

----------Iter = 11000----------

Loss = 1.862377

Variable usage = 0.00%

----------Iter = 11050----------

Loss = 1.860655

Variable usage = 0.00%

----------Iter = 11100----------

Loss = 1.858936

Variable usage = 0.00%

----------Iter = 11150----------

Loss = 1.857221

Variable usage = 0.00%

----------Iter = 11200----------

Loss = 1.855510

Variable usage = 0.00%

----------Iter = 11250----------

Loss = 1.853801

Variable usage = 0.00%

----------Iter = 11300----------

Loss = 1.852096

Variable usage = 0.00%

----------Iter = 11350----------

Loss = 1.850395

Variable usage = 0.00%

----------Iter = 11400----------

Loss = 1.848697

Variable usage = 0.00%

----------Iter = 11450----------

Loss = 1.847002

Variable usage = 0.00%

----------Iter = 11500----------

Loss = 1.845311

Variable usage = 0.00%

----------Iter = 11550----------

Loss = 1.843623

Variable usage = 0.00%

----------Iter = 11600----------

Loss = 1.841938

Variable usage = 0.00%

----------Iter = 11650----------

Loss = 1.840257

Variable usage = 0.00%

----------Iter = 11700----------

Loss = 1.838579

Variable usage = 0.00%

----------Iter = 11750----------

Loss = 1.836905

Variable usage = 0.00%

----------Iter = 11800----------

Loss = 1.835234

Variable usage = 0.00%

----------Iter = 11850----------

Loss = 1.833566

Variable usage = 0.00%

----------Iter = 11900----------

Loss = 1.831901

Variable usage = 0.00%

----------Iter = 11950----------

Loss = 1.830240

Variable usage = 0.00%

----------Iter = 12000----------

Loss = 1.828582

Variable usage = 0.00%

----------Iter = 12050----------

Loss = 1.826928

Variable usage = 0.00%

----------Iter = 12100----------

Loss = 1.825277

Variable usage = 0.00%

----------Iter = 12150----------

Loss = 1.823629

Variable usage = 0.00%

----------Iter = 12200----------

Loss = 1.821984

Variable usage = 0.00%

----------Iter = 12250----------

Loss = 1.820343

Variable usage = 0.00%

----------Iter = 12300----------

Loss = 1.818705

Variable usage = 0.00%

----------Iter = 12350----------

Loss = 1.817070

Variable usage = 0.00%

----------Iter = 12400----------

Loss = 1.815439

Variable usage = 0.00%

----------Iter = 12450----------

Loss = 1.813810

Variable usage = 0.00%

----------Iter = 12500----------

Loss = 1.812185

Variable usage = 0.00%

----------Iter = 12550----------

Loss = 1.810563

Variable usage = 0.00%

----------Iter = 12600----------

Loss = 1.808945

Variable usage = 0.00%

----------Iter = 12650----------

Loss = 1.807330

Variable usage = 0.00%

----------Iter = 12700----------

Loss = 1.805717

Variable usage = 0.00%

----------Iter = 12750----------

Loss = 1.804109

Variable usage = 0.00%

----------Iter = 12800----------

Loss = 1.802503

Variable usage = 0.00%

----------Iter = 12850----------

Loss = 1.800901

Variable usage = 0.00%

----------Iter = 12900----------

Loss = 1.799302

Variable usage = 0.00%

----------Iter = 12950----------

Loss = 1.797706

Variable usage = 0.00%

----------Iter = 13000----------

Loss = 1.796113

Variable usage = 0.00%

----------Iter = 13050----------

Loss = 1.794523

Variable usage = 0.00%

----------Iter = 13100----------

Loss = 1.792937

Variable usage = 0.00%

----------Iter = 13150----------

Loss = 1.791353

Variable usage = 0.00%

----------Iter = 13200----------

Loss = 1.789773

Variable usage = 0.00%

----------Iter = 13250----------

Loss = 1.788196

Variable usage = 0.00%

----------Iter = 13300----------

Loss = 1.786622

Variable usage = 0.00%

----------Iter = 13350----------

Loss = 1.785052

Variable usage = 0.00%

----------Iter = 13400----------

Loss = 1.783484

Variable usage = 0.00%

----------Iter = 13450----------

Loss = 1.781920

Variable usage = 0.00%

----------Iter = 13500----------

Loss = 1.780359

Variable usage = 0.00%

----------Iter = 13550----------

Loss = 1.778800

Variable usage = 0.00%

----------Iter = 13600----------

Loss = 1.777245

Variable usage = 0.00%

----------Iter = 13650----------

Loss = 1.775693

Variable usage = 0.00%

----------Iter = 13700----------

Loss = 1.774144

Variable usage = 0.00%

----------Iter = 13750----------

Loss = 1.772599

Variable usage = 0.00%

----------Iter = 13800----------

Loss = 1.771056

Variable usage = 0.00%

----------Iter = 13850----------

Loss = 1.769517

Variable usage = 0.00%

----------Iter = 13900----------

Loss = 1.767980

Variable usage = 0.00%

----------Iter = 13950----------

Loss = 1.766447

Variable usage = 0.00%

----------Iter = 14000----------

Loss = 1.764916

Variable usage = 0.00%

----------Iter = 14050----------

Loss = 1.763389

Variable usage = 0.00%

----------Iter = 14100----------

Loss = 1.761865

Variable usage = 0.00%

----------Iter = 14150----------

Loss = 1.760344

Variable usage = 0.00%

----------Iter = 14200----------

Loss = 1.758825

Variable usage = 0.00%

----------Iter = 14250----------

Loss = 1.757310

Variable usage = 0.00%

----------Iter = 14300----------

Loss = 1.755798

Variable usage = 0.00%

----------Iter = 14350----------

Loss = 1.754289

Variable usage = 0.00%

----------Iter = 14400----------

Loss = 1.752783

Variable usage = 0.00%

----------Iter = 14450----------

Loss = 1.751280

Variable usage = 0.00%

----------Iter = 14500----------

Loss = 1.749780

Variable usage = 0.00%

----------Iter = 14550----------

Loss = 1.748283

Variable usage = 0.00%

----------Iter = 14600----------

Loss = 1.746789

Variable usage = 0.00%

----------Iter = 14650----------

Loss = 1.745298

Variable usage = 0.00%

----------Iter = 14700----------

Loss = 1.743810

Variable usage = 0.00%

----------Iter = 14750----------

Loss = 1.742324

Variable usage = 0.00%

----------Iter = 14800----------

Loss = 1.740842

Variable usage = 0.00%

----------Iter = 14850----------

Loss = 1.739363

Variable usage = 0.00%

----------Iter = 14900----------

Loss = 1.737887

Variable usage = 0.00%

----------Iter = 14950----------

Loss = 1.736413

Variable usage = 0.00%

----------Iter = 15000----------

Loss = 1.734943

Variable usage = 0.00%

----------Iter = 15050----------

Loss = 1.733476

Variable usage = 0.00%

----------Iter = 15100----------

Loss = 1.732011

Variable usage = 0.00%

----------Iter = 15150----------

Loss = 1.730550

Variable usage = 0.00%

----------Iter = 15200----------

Loss = 1.729091

Variable usage = 0.00%

----------Iter = 15250----------

Loss = 1.727635

Variable usage = 0.00%

----------Iter = 15300----------

Loss = 1.726182

Variable usage = 0.00%

----------Iter = 15350----------

Loss = 1.724733

Variable usage = 0.00%

----------Iter = 15400----------

Loss = 1.723285

Variable usage = 0.00%

----------Iter = 15450----------

Loss = 1.721841

Variable usage = 0.00%

----------Iter = 15500----------

Loss = 1.720400

Variable usage = 0.00%

----------Iter = 15550----------

Loss = 1.718962

Variable usage = 0.00%

----------Iter = 15600----------

Loss = 1.717526

Variable usage = 0.00%

----------Iter = 15650----------

Loss = 1.716094

Variable usage = 0.00%

----------Iter = 15700----------

Loss = 1.714664

Variable usage = 0.00%

----------Iter = 15750----------

Loss = 1.713237

Variable usage = 0.00%

----------Iter = 15800----------

Loss = 1.711813

Variable usage = 0.00%

----------Iter = 15850----------

Loss = 1.710392

Variable usage = 0.00%

----------Iter = 15900----------

Loss = 1.708973

Variable usage = 0.00%

----------Iter = 15950----------

Loss = 1.707558

Variable usage = 0.00%

----------Iter = 16000----------

Loss = 1.706145

Variable usage = 0.00%

----------Iter = 16050----------

Loss = 1.704735

Variable usage = 0.00%

----------Iter = 16100----------

Loss = 1.703328

Variable usage = 0.00%

----------Iter = 16150----------

Loss = 1.701924

Variable usage = 0.00%

----------Iter = 16200----------

Loss = 1.700522

Variable usage = 0.00%

----------Iter = 16250----------

Loss = 1.699124

Variable usage = 0.00%

----------Iter = 16300----------

Loss = 1.697728

Variable usage = 0.00%

----------Iter = 16350----------

Loss = 1.696335

Variable usage = 0.00%

----------Iter = 16400----------

Loss = 1.694945

Variable usage = 0.00%

----------Iter = 16450----------

Loss = 1.693557

Variable usage = 0.00%

----------Iter = 16500----------

Loss = 1.692172

Variable usage = 0.00%

----------Iter = 16550----------

Loss = 1.690791

Variable usage = 0.00%

----------Iter = 16600----------

Loss = 1.689411

Variable usage = 0.00%

----------Iter = 16650----------

Loss = 1.688035

Variable usage = 0.00%

----------Iter = 16700----------

Loss = 1.686661

Variable usage = 0.00%

----------Iter = 16750----------

Loss = 1.685290

Variable usage = 0.00%

----------Iter = 16800----------

Loss = 1.683922

Variable usage = 0.00%

----------Iter = 16850----------

Loss = 1.682557

Variable usage = 0.00%

----------Iter = 16900----------

Loss = 1.681194

Variable usage = 0.00%

----------Iter = 16950----------

Loss = 1.679834

Variable usage = 0.00%

----------Iter = 17000----------

Loss = 1.678477

Variable usage = 0.00%

----------Iter = 17050----------

Loss = 1.677122

Variable usage = 0.00%

----------Iter = 17100----------

Loss = 1.675770

Variable usage = 0.00%

----------Iter = 17150----------

Loss = 1.674421

Variable usage = 0.00%

----------Iter = 17200----------

Loss = 1.673074

Variable usage = 0.00%

----------Iter = 17250----------

Loss = 1.671730

Variable usage = 0.00%

----------Iter = 17300----------

Loss = 1.670389

Variable usage = 0.00%

----------Iter = 17350----------

Loss = 1.669051

Variable usage = 0.00%

----------Iter = 17400----------

Loss = 1.667715

Variable usage = 0.00%

----------Iter = 17450----------

Loss = 1.666382

Variable usage = 0.00%

----------Iter = 17500----------

Loss = 1.665052

Variable usage = 0.00%

----------Iter = 17550----------

Loss = 1.663724

Variable usage = 0.00%

----------Iter = 17600----------

Loss = 1.662399

Variable usage = 0.00%

----------Iter = 17650----------

Loss = 1.661076

Variable usage = 0.00%

----------Iter = 17700----------

Loss = 1.659757

Variable usage = 0.00%

----------Iter = 17750----------

Loss = 1.658439

Variable usage = 0.00%

----------Iter = 17800----------

Loss = 1.657125

Variable usage = 0.00%

----------Iter = 17850----------

Loss = 1.655813

Variable usage = 0.00%

----------Iter = 17900----------

Loss = 1.654503

Variable usage = 0.00%

----------Iter = 17950----------

Loss = 1.653197

Variable usage = 0.00%

----------Iter = 18000----------

Loss = 1.651893

Variable usage = 0.00%

----------Iter = 18050----------

Loss = 1.650591

Variable usage = 0.00%

----------Iter = 18100----------

Loss = 1.649292

Variable usage = 0.00%

----------Iter = 18150----------

Loss = 1.647996

Variable usage = 0.00%

----------Iter = 18200----------

Loss = 1.646703

Variable usage = 0.00%

----------Iter = 18250----------

Loss = 1.645411

Variable usage = 0.00%

----------Iter = 18300----------

Loss = 1.644123

Variable usage = 0.00%

----------Iter = 18350----------

Loss = 1.642837

Variable usage = 0.00%

----------Iter = 18400----------

Loss = 1.641553

Variable usage = 0.00%

----------Iter = 18450----------

Loss = 1.640272

Variable usage = 0.00%

----------Iter = 18500----------

Loss = 1.638994

Variable usage = 0.00%

----------Iter = 18550----------

Loss = 1.637719

Variable usage = 0.00%

----------Iter = 18600----------

Loss = 1.636446

Variable usage = 0.00%

----------Iter = 18650----------

Loss = 1.635175

Variable usage = 0.00%

----------Iter = 18700----------

Loss = 1.633907

Variable usage = 0.00%

----------Iter = 18750----------

Loss = 1.632641

Variable usage = 0.00%

----------Iter = 18800----------

Loss = 1.631378

Variable usage = 0.00%

----------Iter = 18850----------

Loss = 1.630118

Variable usage = 0.00%

----------Iter = 18900----------

Loss = 1.628860

Variable usage = 0.00%

----------Iter = 18950----------

Loss = 1.627604

Variable usage = 0.00%

----------Iter = 19000----------

Loss = 1.626351

Variable usage = 0.00%

----------Iter = 19050----------

Loss = 1.625101

Variable usage = 0.00%

----------Iter = 19100----------

Loss = 1.623853

Variable usage = 0.00%

----------Iter = 19150----------

Loss = 1.622608

Variable usage = 0.00%

----------Iter = 19200----------

Loss = 1.621365

Variable usage = 0.00%

----------Iter = 19250----------

Loss = 1.620124

Variable usage = 0.00%

----------Iter = 19300----------

Loss = 1.618886

Variable usage = 0.00%

----------Iter = 19350----------

Loss = 1.617651

Variable usage = 0.00%

----------Iter = 19600----------

Loss = 1.611510

Variable usage = 0.00%

----------Iter = 19650----------

Loss = 1.610289

Variable usage = 0.00%

----------Iter = 19700----------

Loss = 1.609071

Variable usage = 0.00%

----------Iter = 19750----------

Loss = 1.607855

Variable usage = 0.00%

----------Iter = 19800----------

Loss = 1.606641

Variable usage = 0.00%

----------Iter = 19850----------

Loss = 1.605430

Variable usage = 0.00%

----------Iter = 19950----------

Loss = 1.603015

Variable usage = 0.00%

----------Iter = 20000----------

Loss = 1.601812

Variable usage = 0.00%

- The recorded losses are CUDA tensors, which caused an error at this point, so each one is moved to the CPU and converted to NumPy

train_loss_np = np.array([loss.cpu().item() for loss in train_loss_list])

# Loss function plot

plt.figure(figsize=(8, 5))

plt.plot(50 * np.arange(len(train_loss_np)), train_loss_np)

plt.title('cLSTM training')

plt.ylabel('Loss')

plt.xlabel('Training steps')

plt.tight_layout()

plt.show()

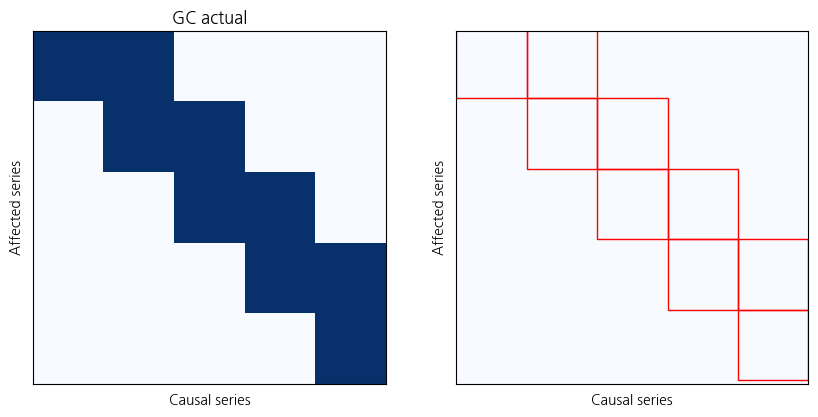

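# GC matrix for the 5 weather variables (temperature, precipitation, wind speed, humidity, solar radiation)

GC = np.array([[1, 1, 0, 0, 0], # temperature -> precipitation

               [0, 1, 1, 0, 0], # precipitation -> wind speed

               [0, 0, 1, 1, 0], # wind speed -> humidity

               [0, 0, 0, 1, 1], # humidity -> solar radiation

               [0, 0, 0, 0, 1]]) # solar radiation (self loop only)

# Check learned Granger causality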

GC_est = clstm.GC().cpu().data.numpy()

print('True variable usage = %.2f%%' % (100 * np.mean(GC)))

print('Estimated variable usage = %.2f%%' % (100 * np.mean(GC_est)))

print('Accuracy = %.2f%%' % (100 * np.mean(GC == GC_est)))

# Make figures

fig, axarr = plt.subplots(1, 2, figsize=(10, 5))

axarr[0].imshow(GC, cmap='Blues')

axarr[0].set_title('GC actual')

axarr[0].set_ylabel('Affected series')

axarr[0].set_xlabel('Causal series')

axarr[0].set_xticks([])

axarr[0].set_yticks([])

axarr[1].imshow(GC_est, cmap='Blues', vmin=0, vmax=1, extent=(0, len(GC_est), len(GC_est), 0))

axarr[1].set_ylabel('Affected series')

axarr[1].set_xlabel('Causal series')

axarr[1].set_xticks([])

axarr[1].set_yticks([])

# Mark disagreements

for i in range(len(GC_est)):

    for j in range(len(GC_est)):

        if GC[i, j] != GC_est[i, j]:

            rect = plt.Rectangle((j, i-0.05), 1, 1, facecolor='none', edgecolor='red', linewidth=1)

            axarr[1].add_patch(rect)

plt.show()

True variable usage = 36.00%

Estimated variable usage = 0.00%

Accuracy = 64.00%

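- Granger Causality

Variable order: (temperature, precipitation, wind speed, humidity, solar radiation)

GC is the actual causal structure between the variables; GC_est is the causal structure estimated by the trained model

Accuracy is computed by comparing the actual GC with the estimated GC_est

Entries where the two disagree are outlined in red

Open question: how should the actual GC be specified?

Why are so many entries wrong? Something may have gone wrong.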
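The reported numbers can be reproduced by hand, which helps when reading the accuracy. The sketch below assumes the estimated matrix is all zeros, as the printed "Estimated variable usage = 0.00%" suggests. The `GC` entries are copied from the notebook, and the helper `mean` is a hypothetical stand-in for `np.mean`.

```python
# Hand-specified GC matrix from the notebook (rows and columns follow the
# variable order: temperature, precipitation, wind speed, humidity, solar radiation).
GC = [
    [1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 1],
]
p = len(GC)

# Assumed estimate: no input series is used at all.
GC_est = [[0] * p for _ in range(p)]


def mean(matrix):
    """Average over all entries of a nested list, like np.mean on a 2-D array."""
    cells = [v for row in matrix for v in row]
    return sum(cells) / len(cells)


true_usage = mean(GC)
agreement = mean(
    [[int(a == b) for a, b in zip(ra, rb)] for ra, rb in zip(GC, GC_est)]
)

print(f"True variable usage = {100 * true_usage:.2f}%")  # 9 of 25 entries are 1
print(f"Accuracy = {100 * agreement:.2f}%")              # 16 of 25 entries are 0
```

With an all-zero estimate, accuracy is simply the share of zeros in `GC` (16 of 25, or 64%), so the figure reflects how sparse the hand-specified matrix is and not how well the model recovered any relationship.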